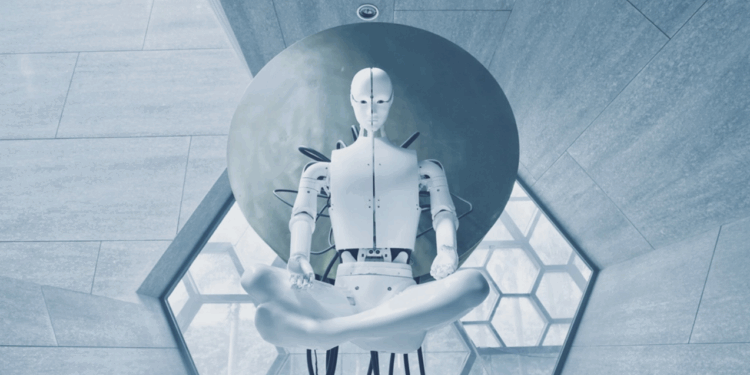

The tech giant Google is facing a scandal over “worrying” scientific data. The case was brought to light by a Google engineer, who suggested that LaMDA, the artificial intelligence developed by the world's largest search engine company, behaves like “a person”.

He said he had held a series of conversations with LaMDA, and that the system described itself as a sentient person.

Blake Lemoine, a senior software engineer in Google's AI organization, told The Washington Post that he began chatting with the LaMDA (Language Model for Dialogue Applications) interface in the fall of 2021 as part of his job.

According to Lemoine, the system is able to think and even to develop human feelings. He said in particular that over the past six months, LaMDA has been “incredibly coherent” about what it believes are its rights as a person.

While talking with LaMDA about religion, consciousness and robotics, the engineer quickly concluded that the artificial intelligence was no longer reasoning like a simple computer. The engineer has since been fired by Google.